Responsible AI in healthcare: from compliance to competitive advantage

"An AI solution said 'no' to surgery": but who's accountable?

A well-known U.S. healthcare organization recently faced public backlash after an AI-driven clinical decision support tool mistakenly denied a surgical procedure to a high-risk patient.

The algorithm, trained on outdated data, flagged the patient as a non-ideal candidate for the procedure. A manual review reversed the decision, but not before the damage was done: trust among clinicians and patients had eroded, and an internal audit had been launched. This is not an isolated incident.

Healthcare leaders are waking up to the reality that AI missteps are no longer mere technical glitches; they are governance failures. And in healthcare, every misstep is magnified because human lives are on the line.

Why is responsible AI now a boardroom priority?

AI is no longer a back-office tool. It is stepping into decision-making roles in care delivery, diagnostics, and clinical and operational workflows. Yet most organizations that have implemented AI solutions still fail to approach AI governance proactively. In fact, most AI governance is reactive, dealing with the fallout after the fact. This approach heightens ethical, legal, and reputational risk, a recipe for disaster for both the vendor and its clients: payers, providers, and life sciences companies.

“According to Gartner, by 2027, 50% of CIOs will fail to deliver the expected ROI on their AI budgets due to trust deficits.”

In healthcare, where clinicians and patients demand transparency, this trust gap can lead to delayed adoption, reputational damage, and regulatory scrutiny.

From risk to differentiator: a case study in ethical leadership

One mid-sized health system recently turned this challenge into a competitive edge. Facing pressure to deploy generative AI for patient engagement, its leadership resisted the allure of “move fast and automate.”

Instead, they established an AI Ethics Advisory Committee of clinical experts, industry advisors, legal experts, patient advocates, and IT specialists to simulate risk scenarios and ensure appropriate, ethical outcomes. Before every deployment, the committee conducts story-driven use case reviews: narratives of how an AI decision affects a clinician’s workflow, a patient’s experience and care journey, and the organization’s accountability.

The outcome? Their AI initiatives not only met regulatory expectations but also saw clinician adoption rates rise by 40%. More importantly, patients reported a greater sense of trust in digital interactions. This is not a compliance exercise but a strategic differentiator in today’s competitive market.

Responsible AI is a practice, not a policy

Here’s a fact: Responsible AI (RAI) isn’t a document. It’s a discipline. It’s an operating principle that permeates every stage of AI adoption:

- Define Intent - Articulate your core ethical principles (transparency, fairness, accountability) and ensure leadership alignment.

- Operationalize - Bake these principles into AI design, training data, deployment processes, and vendor contracts.

- Monitor Continuously - AI models drift and biases creep in. Regular audits and feedback loops are non-negotiable.

- Own the Consequences - Establish clear escalation and corrective pathways when unintended AI outcomes occur.

Forward-thinking organizations are moving beyond policy documents, and some are already deploying "guardian agents": AI entities designed to set guardrails and boundaries for other AI systems. Ethical deliberation is no longer a human-only activity; it is becoming embedded in AI ecosystems.

The business case: trust as a growth multiplier

Responsible AI should be seen not as a deterrent to AI adoption but as a growth accelerator. Healthcare enterprises that actively manage AI ethics aren’t just avoiding risk; they’re accelerating adoption and innovation.

Data shows that:

- Digital workers with high AI trust are 2X more likely to become everyday users.

- Enterprises with AI ethics embedded in operations see 1.4X faster cross-functional AI adoption across business units.

For healthcare, this isn’t just about user acceptance; it’s about future-proofing your patient relationships and competitive positioning in an AI-first ecosystem. The health systems that build AI trust today will be the ones that win market share tomorrow.

How ZRG can help: from boardroom to bedside

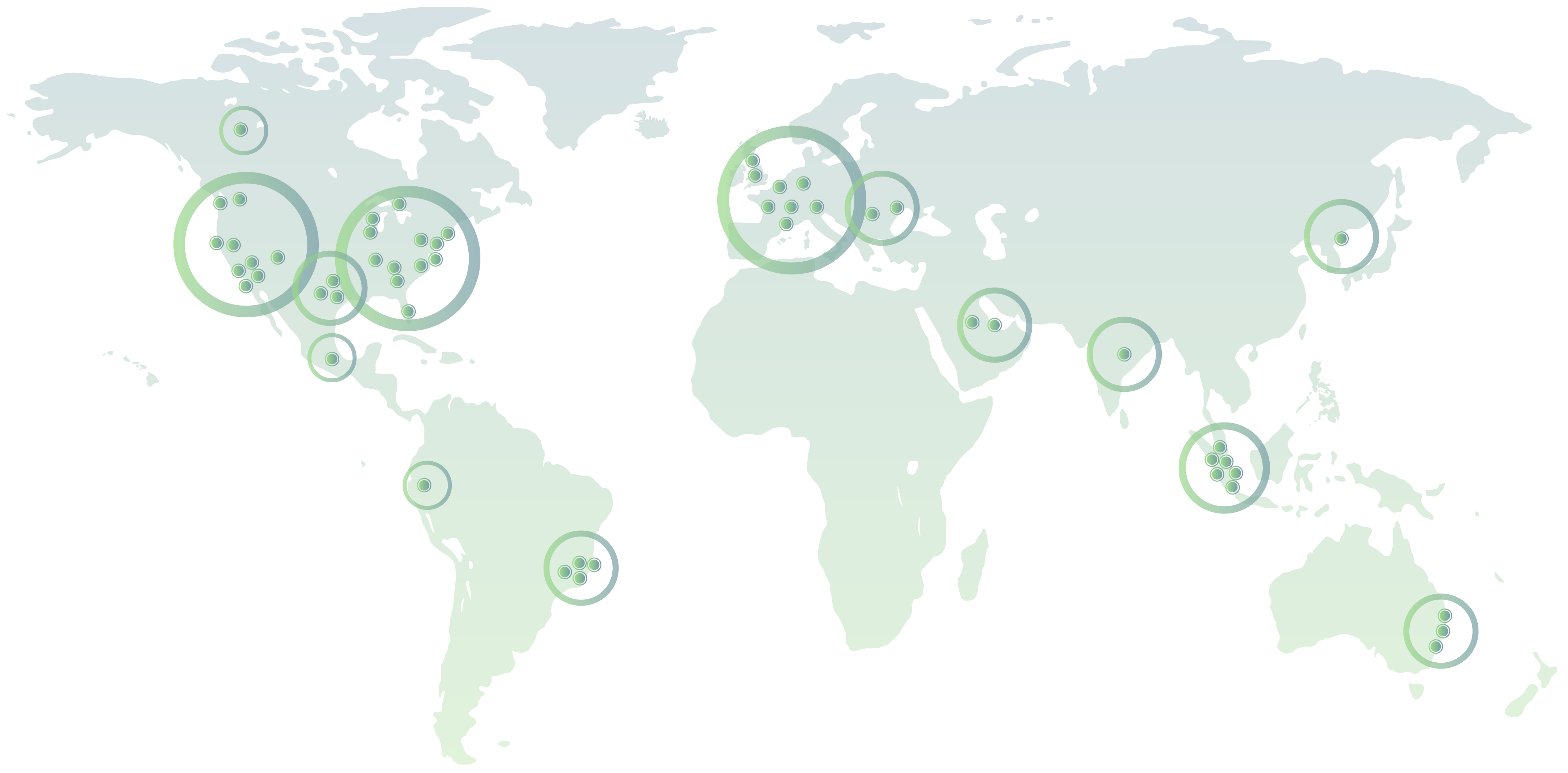

We specialize in helping organizations move from compliance-focused to value-driven Responsible AI strategies. Our strategic advisory and consulting services focus on:

- AI Ethics Readiness Assessment - Tailored for your organization's maturity.

- Setting Up AI Ethics & Governance Boards - Practical frameworks to operationalize ethical oversight.

- Use Case Deep-Dives & Storytelling Workshops - Translating abstract risks into real-world impacts for all stakeholders.

- Vendor Risk Management for AI Solutions - Ensuring third-party AI partners align with your ethical and regulatory expectations.

The conversation around Responsible AI is no longer optional, nor is it just a compliance checkbox; it’s a strategic imperative. The question is: will your AI be a source of differentiation or disillusionment?

Meet the Author